I’ve been looking at Azure for a long time, hearing all the glowing reports about how well integrated the platform is and how easy it is to get things up and running. I’ve been contemplating moving from my current physical server setup to some sort of virtual host, as I can certainly see the appeal of a managed platform that can be administered remotely, reconfigured on the fly if needed, and that provides an easy way to back up and duplicate the environment since it’s all based on repeatable VM images. The promise is great.

This post is a bit of a rant and chronicles some of the issues I’ve run into with Azure VMs. It’s not meant to bash; rather, I’m trying to document what I experienced and to see if maybe – as is often the case – I hit a weird edge case. I’m also interested in what others see with Azure in terms of performance, stability and consistency.

Personally, I find myself in an odd position: I have around 20 web sites that I currently host on my own physical box that I run at my ISP’s site. Most of these are personal sites like this blog, a message board, my online store and a host of other internal business services. I also host a couple of small sites for some of the clubs/associations I belong to, plus a small commercial site for my girlfriend. In short, the hosting I’m talking about is essentially personal hosting that is not paid for out of a commercial budget but with personal money I have to lay out every month, which changes the perspective from which I’m approaching Azure here.

Where I’m at now

My old server is aging – it’s a Server 2008 machine that’s going on 6 years old. The hardware is doing just fine for the apps that I’m hosting – two of which generate a fair bit of traffic, in excess of a million ASP.NET hits a month each. Still, the hardware is barely taxed by this. However, I’d like to upgrade the server to a more recent version of IIS that supports WebSockets and the forthcoming ASP.NET vNext, which won’t run on Windows Server 2008 (or at least doesn’t currently), so I’m due for a complete repave.

My current choices are moving everything off the box to a virtual machine – Azure or otherwise (and after moving a few sites to a new VM I’m leaning towards the latter by far), or installing a newer version of Windows on my aging server. To make the decision even more difficult, my hosting arrangement with my ISP is such that I have a very generous grandfathered rate that makes hosting there very cheap – pricing that Azure can’t even begin to touch. I don’t expect the same rate for anything I move to, but I’d like to be at least in the ballpark, which as it turns out is not easy with Azure – comparatively speaking, it’s very expensive when trying to match the existing hardware I’m running on.

Experiment: Move my Blog to Azure

My blog is the busiest of the personal sites running on my old server. It’s a fairly isolated app without dependencies, but it does have a boatload of data in it between blog entries and comments over the years (as most of you know, my posts tend to be looooong).

In any case, since I have an MSDN subscription and Azure credits, I figured I’d take the opportunity to move some of my sites to a new VM on Azure and experiment to see what kind of performance I really need from a VM. I’ve started this process on several occasions in the past, and each time I’ve backed off because performance was nowhere near what I was hoping for or the price immediately shot up to a level that I just can’t justify. Since then prices have come down some, but they’re still not quite in the range I can really deal with for the 20 or so personal sites I’m running on my box.

To start, I moved my blog over to the VM since it’s by far the busiest site I run, and it would be a good indicator of CPU and bandwidth usage and of how that would fit into the Azure hosting plans.

Installation and Deploying

I decided to create a virtual machine with the following setup:

- A2 Instance (2 cpu 3.5gig) – switched to D2 later

- Second data vhd in Blob Storage

The process of creating new VMs and getting them up and running is very easy and the process takes about 10-15 minutes until you have a live instance to RDP into. That part’s really nice and it works well.

Do it right: Don’t use Quick Create

However, it wasn’t all smooth sailing, because I took the easy route at first. I made the mistake of creating the VM with the Quick Create option, and while that works just fine, it creates a mess of dynamically named components – a whole slew of them: Cloud Service, VM, Storage Account, Storage Container, Disks and VHDs. Additionally, I went through the options too quickly and ended up creating my site in the West Europe region instead of West US.

Deleting VMs and associated resources is a pain

The end result: I had to kill everything and start over. That should be an easy task, but it turned out to be quite a pain, as you have to delete all the component pieces individually and in the right order. Otherwise components end up locked and you can’t delete the parent components. I’m also not fond of all these nested containers of containers of containers. I’m sure they’re needed for the implementation, but why do we have to see them in the portal’s UI? All I should have to do is delete the VMs and delete the VHDs.

To make things worse, files ended up being locked. I was able to delete the VMs, but that left behind the VHD disks and storage accounts. Unfortunately these were locked, and for the better part of an hour I was unable to delete them.

Manually install everything

After I finally managed to completely clean out my Azure account, I re-did the install properly by creating each of the components individually: the storage account first (for the second disk to attach to), then the Windows VM, created manually through the long-form wizard so I could name everything explicitly. It took a little more time, but now at least everything is reasonably organized. Much nicer and frankly the way it should be – the Quick Create wizard should not even be an option!

Up and Running

Once I was up and running, I logged on with RDP and installed a handful of apps required on the server. The main pieces were activating the Web Server role to install IIS, and installing SQL Server locally (yes, I know the database should be off the machine, but my database load isn’t big, so SQL Server is and will stay local on the box).

Slow Desktop

As mentioned, I started with an A2 instance, which is 2 cores and 3.5 GB. Typically I would peg that at the low end of the hardware spectrum for a Windows Server box, but I figured this would be a good place to start for hosting. I quickly found out that an A2 instance was unbearably slow even for installing a bunch of applications from the command line. I used Server Manager to enable IIS, which took seemingly forever (at least 20 minutes), and then used Chocolatey to install a bunch of my utilities and dev tools, including Chrome. It took insanely long – at one point I went out to get lunch, and when I got back an hour later SQL Server was still installing.

The machine was unbearably slow over RDP. The UI would constantly hang, and apps would show “Not Responding”, white-screening even the Windows desktop. I’d get a burst of activity where everything worked smoothly, followed by complete lockups for several minutes where I could move the mouse around the desktop but nothing responded.

For example, opening Chrome (after the initial install and several launches) took nearly a minute. Trying to bring up TaskMan failed altogether – or so I thought, until after about 5 minutes five TaskMan windows popped up because I had clicked multiple times thinking my click hadn’t registered.

nGen?

When I did manage to get Resource Monitor to pop up, I took a look to see what was making the virtual machine so crazy slow, and to my surprise CPU usage was low (under 20%). But disk activity was maxed out. Taking a closer look, I noticed that mscorsvw.exe was hogging disk access like crazy. This is the ngen compilation service that runs in the background after .NET Framework installs or updates, and apparently it was hogging machine resources to the point that the machine slowed to a crawl.

I figured I’d call it a night and let ngen finish after explicitly forcing it to complete all compilation with:

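```
REM ngen.exe lives in %windir%\Microsoft.NET\Framework64\v4.0.30319 and is not on the PATH by default
ngen.exe executequeueditems
```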

The next morning I restarted the VM, and when it finally came back up, performance was at least usable, if still very slow over RDP. No more white-screen lockups, but still massive lag between any click and painfully slow execution of anything actually running on the machine. I even turned off server optimization and put the machine into ‘desktop’ mode, which made next to no noticeable difference.

To give you a point of reference for how slow this was: it took SQL Server Management Studio over 2 minutes to come up for the first time after a reboot.

By comparison, connecting to my 6-year-old 4-core physical server feels like you’re sitting in front of it – a slightly older desktop machine, which is what it is. The RDP connection feels almost live with only the slightest bit of lag, and applications run at appropriate speed. It doesn’t compare to my current-gen laptop, but it certainly matches the perf I would expect from a 6-year-old machine. On the Azure machine – even though the CPU shows no heavy load – everything is just slogging along.

What’s really strange is that even though the machine feels nearly unresponsive, Resource Monitor and TaskMan’s Performance tab show barely any CPU activity. CPU usage spikes to 15-30% but nothing more than that. Sure seems odd, and it looks like a case of a way over-provisioned machine.

Trying other Azure Instance Types

At this point I decided that maybe an A2 instance is simply not enough hardware. A2 is 2 cores with 3.5 GB of memory, which to me seems pretty low, but judging by the resources used on the machine at this point, the A2 doesn’t look like it should be having problems. Committed memory is under 2 GB, and CPU usage is generally in the 15-30% range.

I tried loading up an A3 instance anyway to see if the extra memory or CPU would help. A3 has 4 cores and 7gig which is more comparable to my physical box. It seems odd to want to add more CPU when the CPU doesn’t seem to be taxed on the A2 setup. However, as expected I saw no performance improvement whatsoever with that instance type. The CPU usage was the same (but spread over 4 processors), but actual performance of the desktop was absolutely no better than before.

On the plus side, it’s extremely cool to be able to take an existing application and throw it onto a new hardware setup without having to change your Windows config – that’s pretty awesome and one of the big selling points of a cloud platform.

I did get a new IP address, so if the slow perf was a ‘noisy neighbor’ issue (one or more busy sites on the same physical hardware), then the problem now carried across multiple machines.

D2 Instance – better but still not good

Next I tried the new D2 instance. D2 is 2 cores – 7gb, but running on SSD drives. And that finally DID make a decent difference. Performance improved drastically with a D2 instance and the machine finally felt like an approximation of what it should feel like given the hardware that is running it.

So this seems to point at the abysmal default drive performance of the A2 and A3 instances, which seems insane. While I can understand somewhat slower perf with a non-SSD drive, that doesn’t quite account for the HUGE difference in things like launching apps and plain interactive desktop behavior, which typically aren’t disk-bound, especially on repeated loads. There’s gotta be more to it than just the SSD drives.
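If you want a number for the drive difference rather than a feel for it, a crude sequential-write test on each instance type is one option. The Python sketch below is a hypothetical helper of my own (the name `write_throughput_mb_s` and its defaults are assumptions, not part of any tool mentioned in this post): it writes a temp file in fixed-size blocks, calls `os.fsync` so the data actually reaches the disk, deletes the file and returns MB/s. It only measures large sequential writes, not the small random I/O that SQL Server or ngen generate, so treat the result as a rough indicator.

```python
import os
import tempfile
import time


def write_throughput_mb_s(size_mb=64, block_kb=64, directory=None):
    """Crude sequential-write benchmark (hypothetical helper).

    Writes about size_mb megabytes in block_kb chunks to a temp file in
    `directory` (default: the system temp dir), fsyncs so the data really
    hits the disk, removes the file and returns the write rate in MB/s.
    """
    block = os.urandom(block_kb * 1024)      # random bytes, so nothing compresses along the way
    blocks = (size_mb * 1024) // block_kb    # number of whole blocks to write
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())             # count the flush to disk in the timing
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)                      # file is closed by now, so this works on Windows too
    written_mb = blocks * block_kb / 1024
    return written_mb / elapsed
```

To compare drives, call it with `directory` set to a writable folder on each drive, e.g. `write_throughput_mb_s(directory=r"D:\temp")` (a hypothetical path), and repeat it a few times, since single runs on shared storage can vary.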

I ended up switching back and forth a few times between instance types – getting new IP addresses most times and presumably jumping around different machines, which should mitigate a noisy neighbor problem – but every time I went back to A2 and A3 instances I saw the same unbearably slow performance. It never got any better. Switching back to D2 I saw improved performance, but still nowhere even remotely close to the performance I’m used to from my physical box.

I’ll have more on performance when I talk about some Web app testing later on.

D2 for Installation

For the time being, while configuring the machine, I left it running on D2 so I could actually get shit done. On A2 it was too painful to even use the command line – the console couldn’t keep up with my typing! That tells you how bad it really is!

To be fair this ability to switch instance types is one of the very cool and truly useful features of a cloud platform – you can temporarily set up a machine to run a faster (or slower) instance and then kick it back to your default setup which can be very, very useful and lets you get stuff done.

With VMs you have to be careful with live installations, though – reconfiguring a VM means re-provisioning, and that takes some time. I’d say it takes about 10-15 minutes for a VM to start back up in a new mode and be reasonably functional, and it will be pretty slow for a while after that while components on the box update and indexing and ngen run again…

I also find it odd that the Azure portal shows VMs as ‘running’ after a few minutes, but it takes another 5 minutes before the VM actually becomes accessible via the Web site or RDP, so it’s closer to 10 minutes to reprovision. Machine reboots also seem to take quite a long time… I suppose this all comes back to the slow processor performance.

Installing My Web Blog app

Despite all of this, I decided to at least get a Web app up and running to see how an actual running app would fare. After all, background services tend to perform better and maybe, just maybe these Azure instances are optimized for services.

The WebLog is a pretty simple app, but it pulls all data from a local SQL Server database. The local SQL database file is installed on the C drive since that’s the SSD drive, at least on the D2 instance.

So I installed all the needed IIS components (next time I’ll set up a Chocolatey script for this!), created a Web site and published the WebLog from my dev machine to the server. I backed up the database on my physical server, downloaded the backup to the new machine and restored it into the VM’s SQL Server.

The WebLog app came up on the first try, which was good to see… I had to tweak a few disk permissions to allow local file writes for publishing posts and images and add the SSL cert for some of the admin tasks, but otherwise this process was pretty painless. A nice reality check… software is easy compared to the admin crap 😃

Stress Testing

At this point I had dialed the VM back to the A2 instance and let it run for a while, explicitly ngen’d and put the server back into Background Service mode. I also let the server run for a bit under a secondary domain (http://weblog2.west-wind.com) which I would hit occasionally to keep the server up and running and give the OS some time to stabilize with updates and housekeeping compilation (indexing, ngen etc.).

Initially I tried running external stress tests over the open Internet, but quickly found out that Azure has some Denial of Service filters in front of its servers that make external load testing tricky. The results were very slow and erratic as heck, even though the live site seemed responsive in the browser. I figured Azure was throttling traffic, so remote testing is not really an option, nor even a good guideline for machine performance. So don’t bother externally load testing an Azure VM or site.

So afterwards I RDP’d back in, installed WebSurge locally and fired it up to load test the Web app and see what kind of performance I could get out of it. The nice thing about this is that it’s a simple test that’s easy to repeat on both machines running exactly the same Web application.
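WebSurge does the real work in these tests. For readers who want to reproduce the basic idea without it, here is a minimal Python sketch of a local load test, assuming plain GET requests against a URL such as `http://localhost/` (hypothetical; substitute your own page). It fires a fixed number of requests through a thread pool, counts any error or timeout as a failure, and reports requests per second plus best and worst request times, the same kinds of figures discussed in the results here. A thread pool in a single Python process is far less efficient than a dedicated load tool, so absolute numbers will be lower; it is only meant for like-for-like comparisons between machines.

```python
import http.client
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Result:
    ok: bool        # True if a 2xx/3xx response arrived before the timeout
    seconds: float  # wall-clock time of the request, successful or not


def fetch(url: str, timeout: float) -> Result:
    """Issue one GET request and time it; errors and timeouts count as failures."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
            ok = 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError and socket timeouts are all OSError subclasses
        ok = False
    return Result(ok, time.perf_counter() - start)


def summarize(results, elapsed):
    """Aggregate raw results into throughput and latency figures."""
    good = [r.seconds for r in results if r.ok]
    return {
        "requests": len(results),
        "failed": len(results) - len(good),
        "req_per_sec": len(good) / elapsed if elapsed > 0 else 0.0,
        "min_ms": min(good) * 1000 if good else None,
        "max_ms": max(good) * 1000 if good else None,
    }


def run_load_test(url, total=500, concurrency=8, timeout=15.0):
    """Send `total` GET requests to `url` using `concurrency` parallel workers."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: fetch(url, timeout), range(total)))
    return summarize(results, time.perf_counter() - start)


# Usage (hypothetical URL): print(run_load_test("http://localhost/"))
```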

This was a **total disaster** on the A2 machine. Tests were very slow responding to requests and almost immediately timing out when sending continuous load:

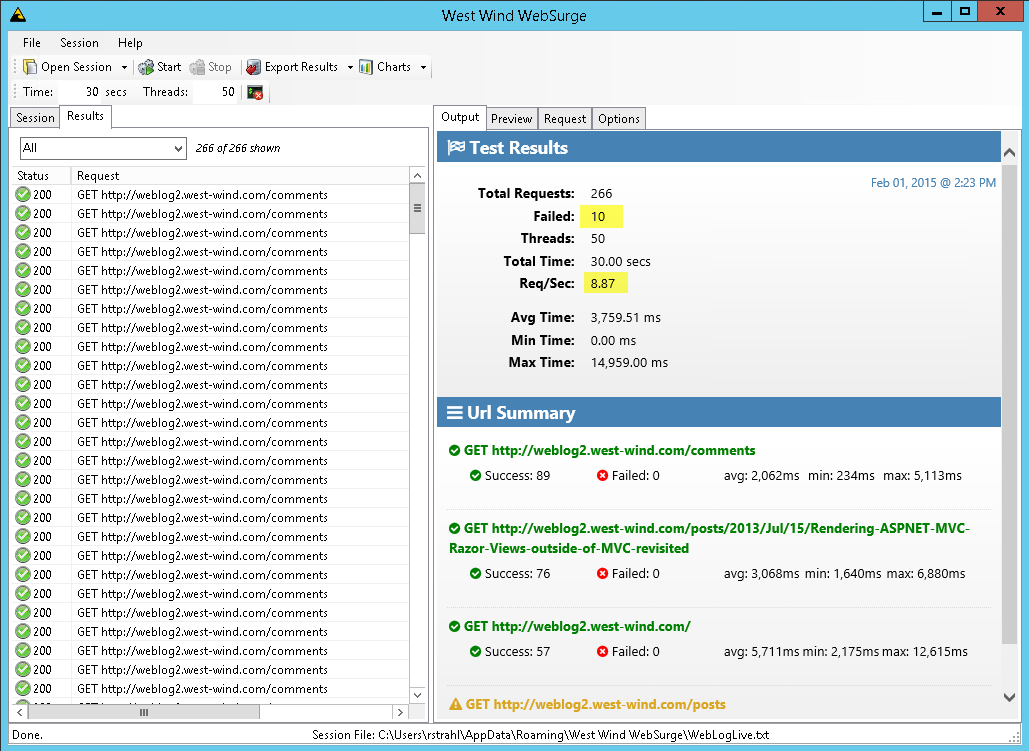

A2 Instance – local machine:

9 requests a second is pretty sad for performance, but even worse there were 10 failed requests that actually timed out. Also check the request times that are over 2 seconds in the best case and close to 10 secs in the worst case under load.

By comparison and for reference, here are the results from my live server running the very same setup:

West Wind 6 year old Physical Server – 4 core low end Xeon

That’s over 200 req/sec for these very content-heavy pages, more than 20x the throughput of the A2 instance!

Next I switched the machine back to D2 to see how that would stack up.

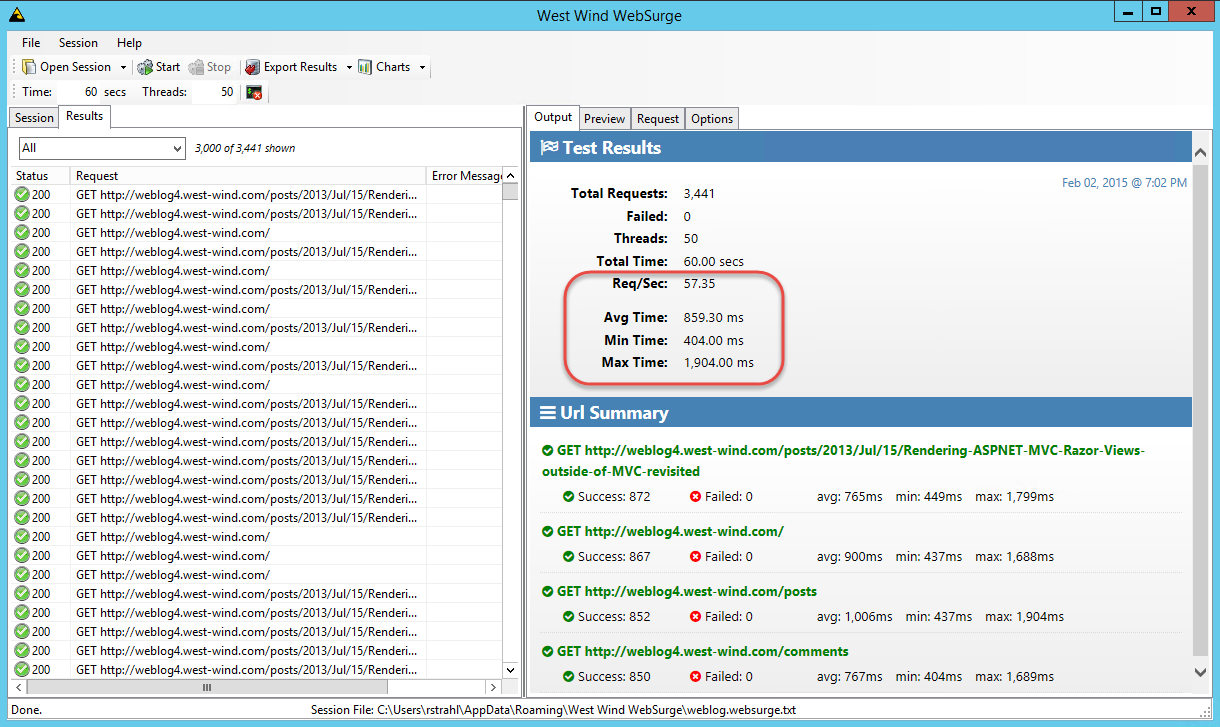

D2 Instance – local machine:

You can see here I’m getting 54 req/sec, which is a lot better but still not anywhere close to the performance of the physical box. Tests here also ranged wildly from 55+ req/sec down to less than 10 req/sec – often in quick succession. I can’t make sense of these tests – there’s no consistency at all.

Even in the high range, when the tests are running well, this is still nearly 5x lower than what I get **consistently** on my server.

After quite a bit of back-and-forth testing on both A2 and D2 instances, I’m seeing the same performance flux across all machines. I logged on again today as I was writing this and ran the tests again, and saw barely 10 req/sec for the D2 machine, which was also showing a bunch of timeout errors. Then I logged back in a couple of hours later, tried again, and was back up in the low 50s as before.

Keep in mind this machine currently has no traffic other than my test accesses. I see 60-70% performance swings, which is totally unacceptable… By contrast my live server is pretty steady, with tests that vary by less than 3%, and that is with actual traffic on the various other sites running on that server. IOW, that’s what I call predictable performance – Azure, not so much.
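The post doesn’t spell out how those swing and variance percentages are calculated. Two plausible ways to express run-to-run consistency from a series of req/sec results are sketched below in Python; both are my assumption, not necessarily how these numbers were derived. `swing_pct` is the spread between the best and worst run relative to the best run, and `cv_pct` is the coefficient of variation (population standard deviation over the mean), which is less sensitive to a single outlier run.

```python
from statistics import mean, pstdev


def swing_pct(req_per_sec):
    """Best-to-worst spread as a percentage of the best run."""
    best, worst = max(req_per_sec), min(req_per_sec)
    return (best - worst) / best * 100


def cv_pct(req_per_sec):
    """Coefficient of variation: population std. deviation as % of the mean."""
    return pstdev(req_per_sec) / mean(req_per_sec) * 100


# Hypothetical run results in req/sec, not measurements from this post.
steady = [201, 204, 199, 202]
erratic = [54, 12, 49, 18]
print(f"steady:  swing {swing_pct(steady):.1f}%  cv {cv_pct(steady):.1f}%")
print(f"erratic: swing {swing_pct(erratic):.1f}%  cv {cv_pct(erratic):.1f}%")
```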

Can it really be this bad?

So, at this point I’m thinking I have to be doing something really wrong to see performance this abysmal compared to relatively low-end physical hardware, and for it to be so incredibly erratic. I’ve pretty much ruled out the noisy neighbor problem because of all the jumping around between IP addresses.

For the Web stress testing I initially pushed external requests in from my local machine here, but I quickly assumed that Azure’s front end that sits in front of the VM might be doing throttling on the network end to prevent denial of service attacks. That would have explained the erratic behavior of requests. But after also testing locally on machine I see the same behavior running on the machine against localhost.

What’s interesting is that in local testing I can see requests getting slower and slower. I start out with maybe a hundred requests that take 100ms or less, then there’s a bump and the request times slowly creep up and up until they reach the 15 second timeout. That certainly seems like some sort of throttling kicking in, but I can’t see anything that would do this *locally*. Does anybody have any thoughts on what could do this locally? IIS has a Dynamic IP Restrictions module that can detect and restrict addresses based on traffic load, but I double-checked and that feature is not enabled on this box.
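To tell a server that is steadily slow apart from the creeping pattern described here, one option is to average request times over consecutive windows of requests (in the order they were sent) and check whether each window is slower than the one before. The Python sketch below does that; the window size and the "at least three rising windows" rule are arbitrary assumptions for illustration, and the check only detects the pattern, it says nothing about what causes it.

```python
from statistics import mean


def window_means(latencies_ms, window):
    """Mean latency of each consecutive block of `window` requests.

    A trailing partial block is dropped so every mean covers the same count.
    """
    blocks = len(latencies_ms) // window
    return [mean(latencies_ms[i * window:(i + 1) * window]) for i in range(blocks)]


def steadily_degrading(latencies_ms, window=100, min_windows=3):
    """True if every window is slower than the one before it."""
    means = window_means(latencies_ms, window)
    if len(means) < min_windows:
        return False
    return all(later > earlier for earlier, later in zip(means, means[1:]))


# Hypothetical latencies: fast at first, then creeping toward a 15 s timeout.
creeping = [90] * 100 + [600] * 100 + [4000] * 100 + [15000] * 100
flat = [120] * 400
print(window_means(creeping, 100), steadily_degrading(creeping))
print(steadily_degrading(flat))
```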

I can’t imagine that Azure could be sitting between an HTTP client and a local connection going against localhost, or could it?

While running tests, though, I can see the external site also start to lag when accessed from my machine – so that at least seems to corroborate that I’m not imagining things here. When the server is busy I see 10 sec wait times for external requests too. So either the site is bogging down due to limited hardware resources, or there’s some throttling that affects everything.

And then there’s the other indication of slowness: the terrible RDP performance. Even on the D2 instance the UI is laggy as hell, and most applications take forever to launch. Also, some of the longer-running admin links on my WebLog site (cleaning up entries, shrinking the DB etc.), which take 10-15 seconds on my live server, hit the server’s 3-minute request timeout on every request. So even there performance is seriously lacking.

Expensive

The other issue I see here is competitive pricing. Running even the lowly A2 instance full time is $133 a month, which is roughly what I pay today for my physical box hosting. But going to D2 – which at this point seems like the absolute minimum I could see myself using – almost doubles that amount.

Granted, if you’re running a business that’s generating revenue that’s probably reasonable, but if you start comparing this type of environment to traditional co-location or machine hosting, which costs half as much and provides significantly more horsepower, it’s really hard to get excited about virtualization.

Sure, there are lots of services that you can take advantage of in Azure and the remote administration and quick standup of resources is pretty awesome – no doubt about it. It’s a different kind of business model altogether. But in the end performance should still matter a lot and the bang for the buck seems pretty low compared to traditional solutions when it comes to performance. The trend here is going the wrong direction.

I realize I have kind of an oddball setup here with the many sites I run, which is why I think I really need a VM to make it cost-efficient versus running Azure WebSites and SQL Azure. When I priced out running just my blog on Azure WebSites plus SQL Azure, it came to almost $100 for that single site (WebSite + SQL Azure). Again, the ROI there is nowhere near the physical box when you figure in 20 sites. Then account for the mish-mash of server software (SQL Server, MongoDB, IIS, some old FoxPro stuff), and you realize that anything but a VM is not really an option. I’ve also found that even when a hosted solution could make sense, I’ve had issues with some piece of .NET not being supported (System.Drawing specifically – I have a few graphics/chart libs I use that just won’t run), so I have several apps that couldn’t run that way anyway.

If in the end I only need virtual machine hosting and no other services, Azure is also very, very pricey compared to some other hosters. Specifically, I recently looked at Vultr, which offers a 4-core, 7 GB Windows VM on SSDs for $84/month. I’ve signed up but haven’t tried them yet. To be honest, ~$100 for this type of setup seems to me at least an acceptable rate. On Azure there’s no exact match for this setup, but it sits between a D2 ($257/mth) and a D3 ($509/mth) instance, which seems like a lot, especially given the mediocre performance I’ve seen so far. The reviews I’ve seen of Vultr’s performance are favorable – we shall see.
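Since the real question is what 20 or so small sites cost to host, it helps to normalize the monthly prices quoted above to a per-site figure. The Python sketch below does that arithmetic using only the numbers from this post (prices as I quoted them at the time, which will have changed since), and it assumes all 20 sites share one VM, while the WebSite + SQL Azure route is billed per site.

```python
SITES = 20

# Monthly prices in USD as quoted in this post, not current pricing.
VM_MONTHLY_USD = {
    "Azure A2 (2 cores, 3.5 GB)": 133.0,
    "Azure D2 (2 cores, 7 GB, SSD)": 257.0,
    "Azure D3": 509.0,
    "Vultr (4 cores, 7 GB, SSD)": 84.0,
}
# Azure WebSite + SQL Azure came to roughly $100 for a single site.
PAAS_PER_SITE_USD = 100.0


def per_site(monthly_usd, sites=SITES):
    """Spread one VM's monthly cost across all the sites it hosts."""
    return monthly_usd / sites


for name, monthly in VM_MONTHLY_USD.items():
    print(f"{name:32} ${monthly:8.2f}/mo  ${per_site(monthly):6.2f}/site")
print(f"{'Azure WebSite + SQL Azure':32} ${PAAS_PER_SITE_USD * SITES:8.2f}/mo  "
      f"${PAAS_PER_SITE_USD:6.2f}/site")
```

At these prices the VM options land between roughly $4 and $25 per site per month, while putting all 20 sites on WebSites + SQL Azure would be around $2,000 a month, before even considering the sites that can’t run that way.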

Is this the Trend?

I’ve been dealing with virtual machines for various customers over the last few years, and in general I’ve gotten the feeling that VM hosting is a serious step backwards for performance. Most hosted solutions either run on low-end hardware that is drastically slower than processors were even a few years ago, or on over-provisioned machines whose performance yo-yos all over the place.

When you get 2 cores assigned – what are you actually getting? 2 cores on a 4 core machine that has also been provisioned for 10 other customers? Yeah that’s going to suck performance wise.

Who measures what the performance of the cores we’re getting in a VM actually is? There’s no easy way to compare performance because it’s totally variable and well ‘virtual’. Most providers tell you a number of cores they provide but not what type of chip and actual speed and how many virtual processors are allocated to each machine/core you’re getting. It could be 4 Atom processors for all we know 😃.

Using VM software you can spin up any number of cores even on a single-core system, so how can we even be sure that we’re getting ‘2 cores’? Does that even have any meaningful value?
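One partial answer to the "what is a core actually worth" question is to run the same fixed CPU-bound workload on each machine and compare the rates. The Python sketch below is a crude, hypothetical probe, not a standard benchmark: it prints how many logical processors the OS exposes (`os.cpu_count()`, which says nothing about the physical hardware behind them) and how many chained SHA-256 hashes per second a single thread manages. Because the loop runs on one core, it measures per-core speed rather than total capacity, and repeating it several times gives a rough feel for the kind of contention discussed above.

```python
import hashlib
import os
import time


def hashes_per_second(iterations=200_000):
    """Single-threaded SHA-256 chain; returns hashes per second on one core."""
    digest = b"\x00" * 32
    start = time.perf_counter()
    for _ in range(iterations):
        digest = hashlib.sha256(digest).digest()  # each step depends on the last
    return iterations / (time.perf_counter() - start)


def sample_rates(runs=3, iterations=200_000):
    """Repeat the measurement; a wide min/max gap hints at contention."""
    return [hashes_per_second(iterations) for _ in range(runs)]


rates = sample_rates()
print(f"logical processors reported: {os.cpu_count()}")
print(f"single-core SHA-256 rate: min {min(rates):,.0f}  max {max(rates):,.0f} hashes/s")
```

The same script run on two machines with the same Python version gives a like-for-like comparison of per-core speed; it does not capture disk or network behavior.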

The Azure experience I just related seems to corroborate that exact scenario. So I’m skeptical even though I would absolutely love to move my machine over to a VM.

What about you?

So, what are your experiences with Azure VMs? Am I just being overly negative, or maybe better – am I just missing something that’s not configured right? How about other VM hosting for Windows? What have you tried – what works and is reliable?

**Update 1:**

I’m in the process of installing a Vultr VM right now, and just through that process I can see that this VM (4 cores, 7 GB) will run circles around any of the Azure VMs I’ve tried so far. It took me about an hour to get the machine fully installed and the Web app up and running (90% with Chocolatey scripts – most of the rest was SQL Server install time). The VM is totally unmanaged – it’s a totally raw Windows image, but hey, for $84/mth and performance that looks somewhat closer to my old machine’s, I’m not complaining.

Once the site and SQL Db were imported from the remote site, running the first batch of load tests resulted in this:

Vultr 4 core, 7gig:

![Vultr 4 core, 7gig load test results](https://web.archive.org/web/20150205015646im_/http://weblog.west-wind.com/images/2015Windows-Live-Writer/Giving-Azure-another-Try-and-Not-getting_751/Vultr%5B4%5D_thumb.png)

This is getting pretty close to what I get on my physical server box. Further, performance was consistent. Looking at CPU stats I can also see the CPU getting loaded up the way it should on a stress test like this, which again makes me think that Azure has some sort of throttling going on that is somehow detecting the load testing and choking it off.

Anyway, I find it pretty interesting that a small provider can offer a much faster experience for a fraction of the cost of Azure. I would expect Azure to charge a premium for all the extra services and convenience, but I would also expect it to lead, or at least be competitive, in performance. From what I see here, I can’t say that it is.

**Update 2:**

After a number of comments and messages saying I should not be seeing A2 performance quite this bad, I set up another A2 instance – this time using all defaults rather than pre-creating the second drive. And it turns out performance is quite a bit better on that setup (or possibly I had better luck with noisy neighbors – I dunno). With this setup the UI is reasonably responsive and the load test performance is also looking much better.

A2 instance

This is a LOT better than my previous install, and in fact better than the perf of the D2 instance I had before, so this is a huge improvement. But having installed a machine on Vultr that is still 3x faster and cheaper, it’s hard to get excited about it at this time…

This still raises the question of why performance of the other A2 instance is so absolutely terrible. Although I pre-created the storage container, I didn’t pre-create the actual boot drive – only the second D: drive – so I still can’t account for this terrible performance.

After some more checking with the new A2 instance, I can also confirm that performance seems a lot more steady on this one, with the expected < 5% variance in tests at various times.

One upside to all of this is I’m getting together a pretty good script to configure a machine quickly. The only manual install and huge time sink in the whole process is the freaking SQL Server install.
